Neural Networks with PyTorch¶

PyTorch is an open-source machine learning framework: an optimized tensor library for deep learning on GPUs and CPUs. It was developed by Facebook's AI division and is used for tasks such as large-scale object detection, segmentation, and classification. Its core data structure is the tensor, a multi-dimensional array that shares many similarities with NumPy arrays.

Please check the software modules list via

marie@login$ module spider pytorch

to find out which PyTorch modules are available.

We recommend using the clusters Alpha, Capella and/or Power9 when working with machine learning workflows and the PyTorch library. You can find detailed hardware specifications in our hardware documentation.

PyTorch Console¶

On the cluster alpha, load the module environment:

# Job submission on alpha nodes with 1 GPU on 1 node with 800 MB per CPU

marie@login.alpha$ srun --gres=gpu:1 -n 1 -c 7 --pty --mem-per-cpu=800 bash

marie@alpha$ module load release/23.04 GCCcore/11.3.0 GCC/11.3.0 OpenMPI/4.1.4 Python/3.10.4

Module GCC/11.3.0, OpenMPI/4.1.4, Python/3.10.4 and 21 dependencies loaded.

marie@alpha$ module load PyTorch/1.12.1-CUDA-11.7.0

Module PyTorch/1.12.1-CUDA-11.7.0 and 42 dependencies loaded.

Torchvision on the cluster alpha

On the cluster alpha, the module torchvision is not yet available within the module system (as of 19.08.2021).

Torchvision can be made available by using a virtual environment:

marie@alpha$ virtualenv --system-site-packages python-environments/torchvision_env

marie@alpha$ source python-environments/torchvision_env/bin/activate

marie@alpha$ pip install torchvision --no-deps

Using the --no-deps option for "pip install" is necessary here as otherwise the PyTorch version might be replaced and you will run into trouble with the CUDA drivers.

On the cluster Power9:

# Job submission on power9 nodes with 1 GPU on 1 node with 800 MB per CPU

marie@login.power9$ srun --gres=gpu:1 -n 1 -c 7 --pty --mem-per-cpu=800 bash

After calling

marie@login.power9$ module spider pytorch

we know that we can load PyTorch (including torchvision) with

marie@power9$ module load release/23.04 GCC/11.3.0 OpenMPI/4.1.4 torchvision/0.13.1

Modules GCC/11.3.0, OpenMPI/4.1.4, torchvision/0.13.1 and 62 dependencies loaded.

Now, we check that we can access PyTorch:

marie@power9$ python -c "import torch; print(torch.__version__)"

The following example shows how to create a Python virtual environment and import PyTorch.

# Create folder

marie@power9$ mkdir python-environments

# Check which Python you are using

marie@power9$ which python

/sw/installed/Python/3.7.4-GCCcore-8.3.0/bin/python

# Create virtual environment "env" which inherits the global site packages

marie@power9$ virtualenv --system-site-packages python-environments/env

[...]

# Activate virtual environment "env". Example output: (env) bash-4.2$

marie@power9$ source python-environments/env/bin/activate

marie@power9$ python -c "import torch; print(torch.__version__)"
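
A slightly more detailed check can also report whether a GPU is visible inside the job. The following is a minimal sketch, not part of the official workflow; the helper name describe_torch is hypothetical and chosen only for illustration.

```python
import importlib.util


def describe_torch():
    """Collect basic facts about the PyTorch installation (hypothetical helper)."""
    info = {"installed": importlib.util.find_spec("torch") is not None}
    if info["installed"]:
        import torch

        info["version"] = torch.__version__
        info["cuda_available"] = torch.cuda.is_available()
        info["gpu_count"] = torch.cuda.device_count()
    return info


if __name__ == "__main__":
    for key, value in describe_torch().items():
        print(f"{key}: {value}")
```

If cuda_available is reported as False inside a GPU job, it may be worth checking that the job requested a GPU and that a CUDA-enabled PyTorch module is loaded.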

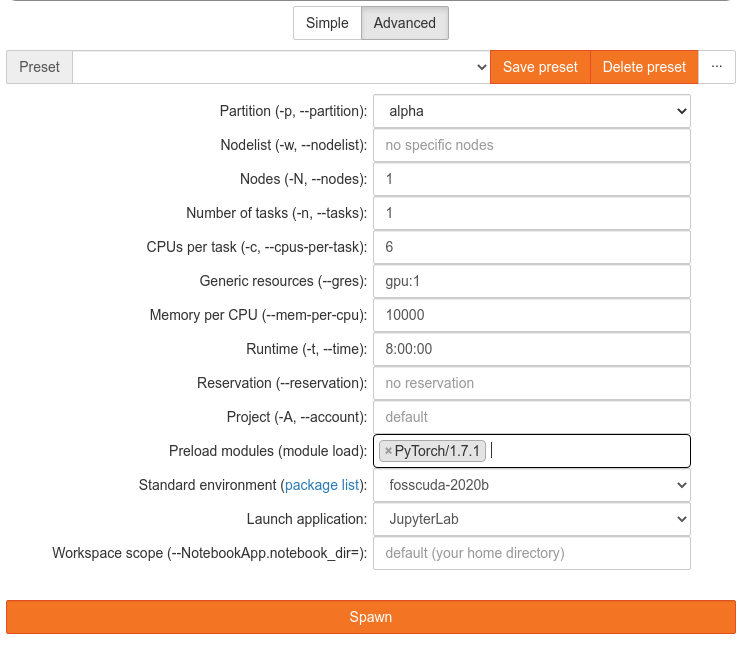

PyTorch in JupyterHub¶

In addition to using interactive and batch jobs, it is possible to work with PyTorch using JupyterHub. The production and test environments of JupyterHub contain Python kernels that come with PyTorch support.

Distributed PyTorch¶

For details on how to run PyTorch with multiple GPUs and/or multiple nodes, see distributed training.

Migrate PyTorch-script from CPU to GPU¶

It is recommended to use GPUs when using large training data sets. While TensorFlow automatically uses GPUs if they are available, in PyTorch you have to move your tensors manually.

First, you need to import torch.cuda:

import torch.cuda

Then you define a device variable, which is set to 'cuda' automatically when a GPU is available, with this code:

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

You then have to move all of your tensors to the selected device. This looks like this:

x_train = torch.FloatTensor(x_train).to(device)

y_train = torch.FloatTensor(y_train).to(device)

Remember that this does not break backward compatibility when you port the script back to a computer without GPU, because without GPU, device is set to 'cpu'.
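
The steps above can be put together as follows. This is a minimal sketch under stated assumptions: the random data and the single linear layer are stand-ins, not taken from a real workflow. Note that the model has to be moved to the same device as the tensors it processes.

```python
import torch
from torch import nn

# Select the GPU if one is visible, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Synthetic stand-in data (assumption): 64 samples, 10 features, 1 target.
x_train = torch.randn(64, 10).to(device)
y_train = torch.randn(64, 1).to(device)

# The model parameters have to live on the same device as the data.
model = nn.Linear(10, 1).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

# One training step; all computation happens on the selected device.
optimizer.zero_grad()
loss = loss_fn(model(x_train), y_train)
loss.backward()
optimizer.step()

print(f"device: {device}, loss: {loss.item():.4f}")
```

The same script runs unchanged on a machine without GPU, where device resolves to 'cpu'.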

Caveats¶

Moving Data Back to the CPU-Memory¶

The CPU cannot directly access variables stored on the GPU. If you want to use the variables with NumPy or anything else that is not PyTorch, you have to move them back to the CPU memory again. This may look like this:

cpu_x_train = x_train.cpu()

print(cpu_x_train)

...

error_train = np.sqrt(metrics.mean_squared_error(y_train[:,1].cpu(), y_prediction_train[:,1]))

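Note that .cpu() only copies the data when the tensor is stored on the GPU, and the result stays attached to the autograd graph. If you want a tensor that no longer tracks gradients, e.g., before converting it to NumPy, use

cpu_x_train = x_train.detach().cpu()

If x_train is stored on the GPU, cpu_x_train is a copy in CPU memory and you can change it without x_train being affected. If x_train is already stored on the CPU, no copy is made and both share the same memory; append .clone() to get an independent copy in that case.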
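
The following minimal sketch illustrates moving a tensor back to the CPU memory for use with NumPy. The tensor contents are made up for illustration. The additional .clone() is an optional step that forces an independent copy even when the script runs on a machine without GPU, where .cpu() returns the original tensor instead of a copy.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A tensor that tracks gradients, as model outputs typically do.
x_train = torch.ones(3, device=device, requires_grad=True)

# detach() drops the autograd history, cpu() moves the data to host memory,
# and numpy() then exposes it as a NumPy array.
x_numpy = x_train.detach().cpu().numpy()

# clone() forces an independent copy even if x_train already lives on the CPU.
independent = x_train.detach().cpu().clone()
independent[0] = 42.0

print(x_numpy)
print(x_train[0].item())  # still 1.0, the original tensor is unchanged
```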

Speed Improvements and Batch Size¶

When you have a lot of very small data points, the speed may actually decrease when you try to train on the GPU. This is because moving data from the CPU memory to the GPU memory takes time. If this occurs, please try using a very large batch size. This way, copying back and forth only takes place a few times and the bottleneck may be reduced.
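
The effect of the batch size on the number of copy operations can be illustrated with a short sketch. The data set is synthetic and the helper count_transfers is hypothetical; it only counts how often a batch is moved to the device during one pass over the data and does not measure run time.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Synthetic stand-in data (assumption): 10,000 small samples with 4 features.
dataset = TensorDataset(torch.randn(10_000, 4), torch.randn(10_000, 1))


def count_transfers(batch_size):
    """Iterate once over the data set and count host-to-device copy rounds."""
    loader = DataLoader(dataset, batch_size=batch_size)
    transfers = 0
    for x_batch, y_batch in loader:
        x_batch, y_batch = x_batch.to(device), y_batch.to(device)
        transfers += 1
    return transfers


print(count_transfers(32))    # 313 batches
print(count_transfers(4096))  # 3 batches
```

Whether the larger batch size actually speeds up training depends on the model and the available GPU memory, so it is worth measuring with your own workload.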